Compiling WordNet on Windows to use with Emacs

As a non-native English speaker who reads, writes and reviews a lot of English text, I frequently look up definitions as well as synonyms of words. Of course there are numerous online sources for this, but I prefer to keep my online 'footprint' small for privacy reasons. Switching to a browser window and entering a search query also takes extra time.

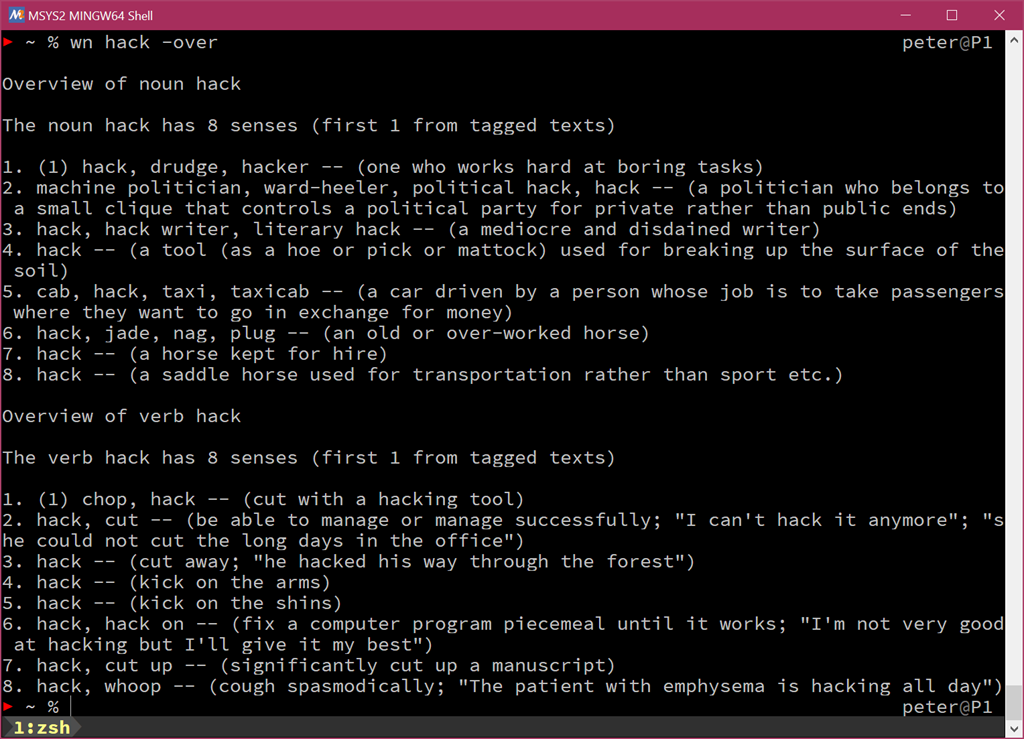

Fortunately, the fine folks at Princeton University compiled WordNet [1], a large lexical database of English that can be used offline, together with a tool to search that database. Even better, somebody wrote a package to use WordNet inside my favorite editor, Emacs [2]. This means that just by placing the cursor on a word inside Emacs, its definition as well as its synonyms can be shown. The source code [3] is kindly provided by Princeton University.

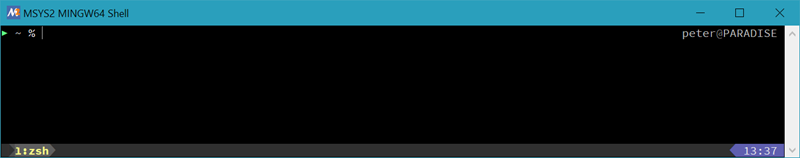

Compiling WordNet using MSYS2 on/for Windows

As is usually the case, compiling for Windows using the MSYS2 subsystem [4] works after a few minor tweaks.

First, start a MSYS2 shell and install the required dependencies (build tools, as well as the programming language Tcl and its widget toolkit Tk):

pacman -Sy --noconfirm base-devel mingw-w64-x86_64-tcl mingw-w64-x86_64-tk
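
Before continuing, it can help to confirm that the freshly installed tools are actually visible in the current shell. The following is only a minimal sketch, not part of the original build steps: the helper `missing_tools` is a hypothetical name, and the command names `make`, `tclsh` and `wish` are assumptions about what the packages above provide. The `mingw-w64-x86_64-*` packages install under `/mingw64`, so the check is expected to pass only in a MINGW64 shell where `/mingw64/bin` is on the `PATH`.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every command given as an
# argument that cannot be found on PATH, one name per line.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Assumed command names: make (build tools), tclsh and wish (Tcl/Tk).
missing=$(missing_tools make tclsh wish)

if [ -n "$missing" ]; then
    # $missing is left unquoted on purpose so the names print on one line.
    echo "Not found on PATH:" $missing
else
    echo "All build prerequisites found."
fi
```

If a name is reported as missing even though the package is installed, the shell type (MSYS versus MINGW64) is a likely cause and worth checking first.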

Then …